
The Definitive Guide to Cloud Storage Pricing

It’s no secret that the cost of ownership model for on-premises storage is nothing like the way enterprise cloud storage pricing works.

What’s more, the process of untangling the pricing of cloud storage services can cause confusion and frustration. With all the talk about the lower costs of cloud storage (outside of variable egress fees), how do you figure out what’s best for your organization?

We routinely see people express confusion about cloud storage pricing, so this guide aims to clarify how it works and what you should expect.

The facts are that cloud storage pricing is complicated, it doesn’t always cost less than on-premises options, and egress charges are unlikely to be a budgeting problem for you.

Despite the complexities of cloud storage pricing, the good news is that the object storage offerings from Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) all have similar pricing models for cloud storage. So, once you get familiar with the approach to cloud storage pricing, you’ll be in a better position to evaluate the cost-effectiveness of all the major cloud providers based on your organization’s needs.

Cloud storage cost variables

There are several enterprise cloud storage cost variables you need to be aware of, including:

- Storage. These costs are typically expressed as a cost per gigabyte per month or a price per terabyte per month or year. Each cloud provider has different tiers of storage that offer vastly different cost, performance, and availability levels.

- Storage operations. API-based actions incur minimal costs, typically priced per 10,000 operations. However, charges for storage operations can add up to meaningful amounts when you’re performing numerous tasks on high-object-count workloads.

- Storage data transfer. A per-gigabyte fee to read data, typically varying by storage tier.

- Egress bandwidth. This is more a network activity cost factor than a storage price variable. There’s a fee for downloading data from any cloud storage when the payload is delivered outside of the cloud region. Egress costs are not incurred when you access data from resources residing within the same region.

Storage costs are the simplest math in the cloud pricing equation

Let’s look at the different cloud storage levels, as follows:

- Performance tiers. This includes the Hot or Premium tier of Azure Blob Storage, or the S3 Standard tier in AWS. Performance tiers are designed for active or hot data that’s highly transactional, offering the best availability service-level agreements (SLAs), lowest activity costs, and lowest latency. However, these tiers also come with the highest prices for object storage.

- Low-touch tiers. This includes the S3 Standard – Infrequent Access tier from AWS, or Microsoft Azure’s Cool tier. Low-touch tiers are for data requiring ready access, but the information is mostly inactive. Low-touch tiers offer availability and low-latency retrieval times with lower storage costs. However, activity costs increase over the performance tiers.

- Archive tiers. This includes GCP’s Coldline, Glacier from AWS, or Microsoft’s Archive tier. Archive tiers in the cloud are often backed by physical tape libraries, so retrieval times aren’t immediate, but the storage costs are incredibly low. A recall can take hours to complete, though you can expedite a request for a fee. Archive tiers are geared toward workloads that are very unlikely to require access but do require long-term storage. Just don’t be too aggressive with what you move to archive tiers because they have the highest activity costs and the slowest retrieval response times.

Generally, these are the three main categories of storage tiers, though we anticipate providers will release additional variations and options over time.

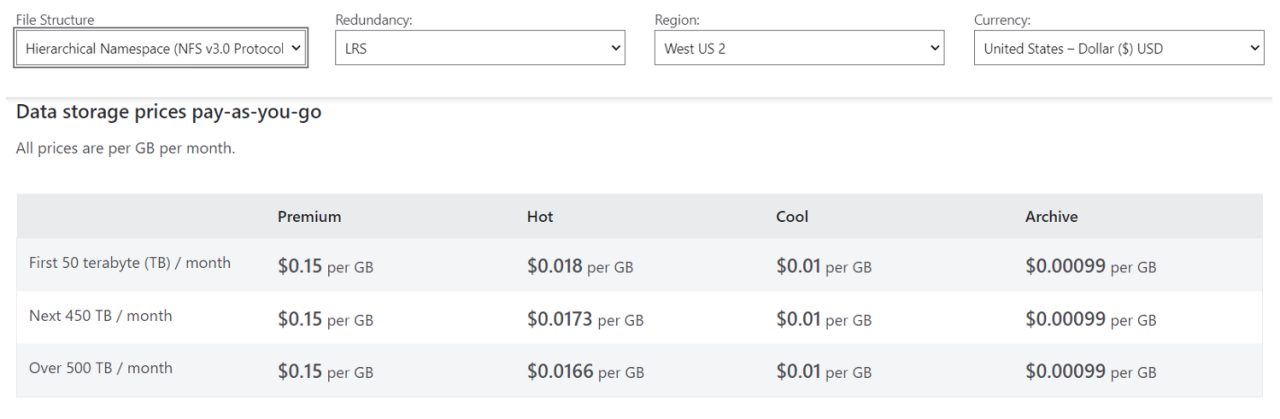

Among the major cloud providers today, you’ll find volume discount prices for some of the performance tiers (i.e., the monthly cost per gigabyte decreases as your data volume passes certain thresholds). However, flat rate fees are all you’ll find with the low-touch and archive tiers. As an example, here’s a look at Microsoft’s object storage pricing for their West US 2 region:

The math for storage is simple: Let’s say we have 2,250 TB of data in Azure, spread across the Hot (600 TB), Cool (200 TB), and Archive (1,450 TB) tiers. Here are the monthly storage costs we should expect from this scenario in the West US 2 region:

| Tier | Data volume | Monthly total |
| --- | --- | --- |
| Hot, first 50 TB (@ $0.018/GB) | 50 TB | $900.00 |
| Hot, next 450 TB (@ $0.0173/GB) | 450 TB | $7,785.00 |
| Hot, over 500 TB (@ $0.0166/GB) | 100 TB | $1,660.00 |
| Cool (@ $0.01/GB) | 200 TB | $2,000.00 |
| Archive (@ $0.00099/GB) | 1,450 TB | $1,435.50 |
| Total | 2,250 TB | $13,780.50 |

(All rows are calculated at 1 TB = 1,000 GB.)

The bulk of the storage capacity in the archive tier is quite inexpensive, while the highest cost factor is the data on the performance tier. In practice, we’d take a hard look (using file analytics and activity auditing) to see if much of the data on the “Hot” tier could move to a more cost-efficient tier. Assuming we could move 80% of the data on Hot and Cool tiers to the lower-cost Archive tier, the cost profile would change significantly:

| Tier | Data volume | Monthly total |
| --- | --- | --- |
| Hot, first 50 TB (@ $0.018/GB) | 50 TB | $900.00 |
| Hot, next 450 TB (@ $0.0173/GB) | 70 TB | $1,211.00 |
| Hot, over 500 TB (@ $0.0166/GB) | 0 TB | $0.00 |
| Cool (@ $0.01/GB) | 40 TB | $400.00 |
| Archive (@ $0.00099/GB) | 2,090 TB | $2,069.10 |
| Total | 2,250 TB | $4,580.10 |
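The banded arithmetic behind these scenarios can be sketched in a few lines of Python. This is a minimal illustration rather than a billing tool: it uses the West US 2 rates quoted above, assumes 1 TB = 1,000 GB, and the function names are hypothetical.

```python
from decimal import Decimal

GB_PER_TB = 1_000  # assumption: decimal units (1 TB = 1,000 GB)

# Hot tier volume bands as quoted above: (band size in TB, price per GB per month).
# A size of None means "everything beyond the previous bands".
HOT_BANDS = [
    (50, Decimal("0.018")),
    (450, Decimal("0.0173")),
    (None, Decimal("0.0166")),
]
COOL_RATE = Decimal("0.01")
ARCHIVE_RATE = Decimal("0.00099")


def hot_cost(tb):
    """Monthly Hot tier cost, filling each volume band in order."""
    remaining = Decimal(tb)
    total = Decimal(0)
    for band_tb, rate in HOT_BANDS:
        if remaining <= 0:
            break
        in_band = remaining if band_tb is None else min(remaining, Decimal(band_tb))
        total += in_band * GB_PER_TB * rate
        remaining -= in_band
    return total


def monthly_storage_cost(hot_tb, cool_tb, archive_tb):
    """Monthly storage cost across all three tiers (Cool and Archive are flat rate)."""
    return (
        hot_cost(hot_tb)
        + Decimal(cool_tb) * GB_PER_TB * COOL_RATE
        + Decimal(archive_tb) * GB_PER_TB * ARCHIVE_RATE
    )


before = monthly_storage_cost(600, 200, 1450)  # original placement
after = monthly_storage_cost(120, 40, 2090)    # 80% of Hot and Cool moved to Archive
print(f"before tiering: ${before:,.2f}")
print(f"after tiering:  ${after:,.2f}")
```

Swapping in another region's rates or a different split between tiers only requires changing the constants and the three arguments.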

Storage operations fees are nobody’s friend

Moving data around isn’t free. Activities like writing, reading, tiering, and fetching the properties of items are examples of cloud storage operations that have associated fees.

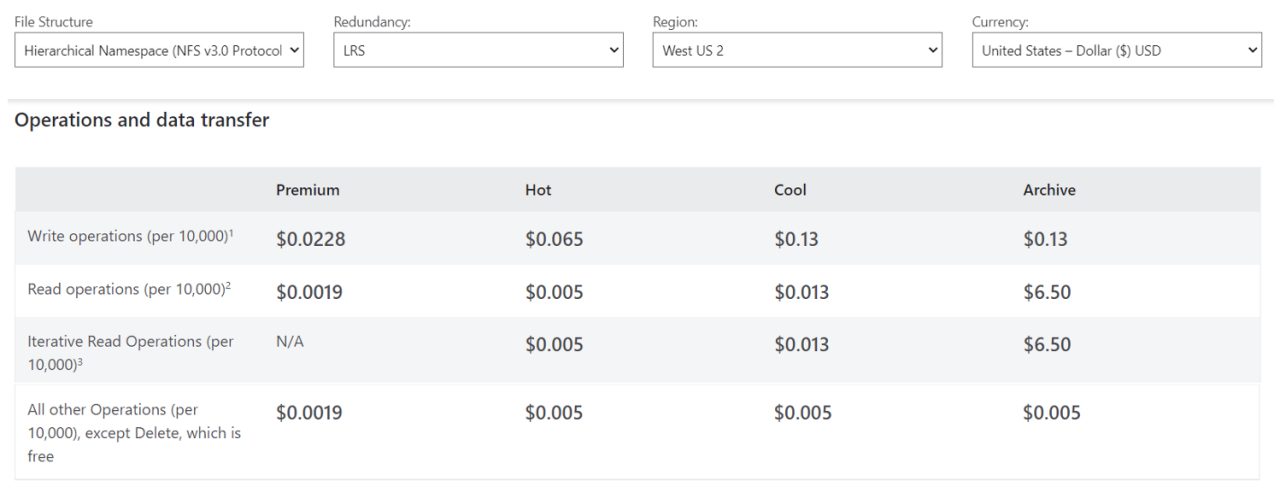

Using the pricing from the West US 2 Azure region, here’s a glimpse of the charges for storage operations (price is per 10,000 operations):

You can see that the lower storage cost tiers have the higher activity costs.

At first glance, storage operation fees aren’t difficult to understand. However, modeling them can be tricky because you need to understand precisely what an application is doing with the data to have an idea of the actual operations activity that will unfold in practice.

For example, large files will incur multiple operations. If objects are written using a stream, there will be at least two write operations (PutBlock and PutBlockList) for each item. In a scenario where an application writes each object, subsequently performs a data integrity check, reads each object for indexing, and then changes the tier, you can wind up with several write and read operations per item for the initial seeding alone.

Because operation costs are metered by transaction count, let’s look at two examples that illustrate how much the cost differs depending on the workload.

(Note: Veritas Alta™ SaaS Protection minimizes both storage operations and bandwidth usage by only backing up what has changed since the previous backup job.)

Example 1: Storage operation costs for a large file workload

A large file workload could be backups, video, or LIDAR images. Let’s assume 200 TB with an average file size of 1 GB for an object count of 200,000 items. For this example, we’ll presume each object incurs four write operations and two read operations for seeding into cloud storage.

Here are the resulting storage operation costs assuming the Hot tier:

| Hot Tier | Operations | Total |
| --- | --- | --- |
| Write operations (@ $0.065 per 10k) | 800,000 | $5.20 |
| Read operations (@ $0.005 per 10k) | 400,000 | $0.20 |
| Total | | $5.40 |

And here are the resulting storage operation costs assuming the Cool tier:

| Cool Tier | Operations | Total |
| --- | --- | --- |
| Write operations (@ $0.13 per 10k) | 800,000 | $10.40 |
| Read operations (@ $0.013 per 10k) | 400,000 | $0.52 |
| Total | | $10.92 |

Obviously, the above fees are negligible. But what happens with a different workload consisting of smaller files?

Example 2: Storage operation costs for a small file workload

A small file workload could be Internet of Things (IoT), genomics, or email. This time let’s assume an equivalent 200 TB but with an average file size of 150 KB for an object count of 1,431,655,765 items. We’ll presume that each object incurs the same four write operations and two read operations for seeding into cloud storage.

Here are the resulting storage operation costs assuming the Hot tier:

| Hot Tier | Operations | Total |
| --- | --- | --- |
| Write operations (@ $0.065 per 10k) | 5,726,623,060 | $37,223.05 |
| Read operations (@ $0.005 per 10k) | 2,863,311,530 | $1,431.66 |
| Total | | $38,654.71 |

And here are the resulting storage operation costs assuming the Cool tier:

| Cool Tier | Operations | Total |
| --- | --- | --- |
| Write operations (@ $0.13 per 10k) | 5,726,623,060 | $74,446.10 |
| Read operations (@ $0.013 per 10k) | 2,863,311,530 | $3,722.30 |
| Total | | $78,168.40 |
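Both examples follow the same formula: operations divided by 10,000, multiplied by the per-10k price. The sketch below reproduces that arithmetic for the seeding model used here (four writes and two reads per object). The function and constant names are hypothetical, and each line item is rounded to the cent before summing, which is an assumption about how the charges are presented rather than a statement about Azure billing.

```python
from decimal import Decimal, ROUND_HALF_UP

# Per-10,000-operation prices quoted in the examples above.
PRICES_PER_10K = {
    "hot": {"write": Decimal("0.065"), "read": Decimal("0.005")},
    "cool": {"write": Decimal("0.13"), "read": Decimal("0.013")},
}
CENT = Decimal("0.01")


def seeding_cost(object_count, tier, writes_per_object=4, reads_per_object=2):
    """Operation charges for seeding `object_count` objects into a tier."""
    prices = PRICES_PER_10K[tier]
    total = Decimal(0)
    for kind, per_object in (("write", writes_per_object), ("read", reads_per_object)):
        operations = Decimal(object_count * per_object)
        charge = operations / 10_000 * prices[kind]
        # Round each line item to the cent before adding it to the total.
        total += charge.quantize(CENT, rounding=ROUND_HALF_UP)
    return total


for label, objects in (("large files", 200_000), ("small files", 1_431_655_765)):
    for tier in ("hot", "cool"):
        print(f"{label:12} {tier:5} ${seeding_cost(objects, tier):,.2f}")
```

Changing `writes_per_object` and `reads_per_object` is a quick way to see how sensitive a high-object-count workload is to what the application actually does with each item.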

The numbers in this second example look a little scary for budget-conscious organizations.

But before you run for the hills with your high-object-count workloads, keep in mind that the long-term storage savings from the lower-cost tiers eventually work out to your advantage.

You’ll need to run the numbers to see how long it takes for you to break even with the initial seeding spike. In most business cases we see, despite the initial operation costs, there is a compelling long-term cost advantage to moving workloads from on-premises infrastructure to a cloud storage service, especially on the lower-cost tiers.
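One way to frame the break-even question is to divide the extra up-front seeding cost by the monthly storage saving. The sketch below does this for the 200 TB small-file workload, comparing Cool against Hot with the rates quoted in this article. It is a simplification under stated assumptions: 1 TB = 1,000 GB, no ongoing operation or retrieval charges after seeding, and a hypothetical function name. A comparison against on-premises costs would need your own figures.

```python
import math
from decimal import Decimal


def break_even_months(extra_upfront_cost, monthly_saving):
    """Whole months until cumulative monthly savings cover an up-front cost."""
    if monthly_saving <= 0:
        raise ValueError("no monthly saving, so the up-front cost is never recovered")
    return math.ceil(Decimal(extra_upfront_cost) / Decimal(monthly_saving))


# Seeding cost: four writes and two reads per object, priced per 10,000 operations.
objects_per_10k = Decimal(1_431_655_765) / 10_000
hot_seeding = objects_per_10k * (4 * Decimal("0.065") + 2 * Decimal("0.005"))
cool_seeding = objects_per_10k * (4 * Decimal("0.13") + 2 * Decimal("0.013"))

# Monthly storage for 200 TB, assuming 1 TB = 1,000 GB.
hot_storage = 50_000 * Decimal("0.018") + 150_000 * Decimal("0.0173")
cool_storage = 200_000 * Decimal("0.01")

months = break_even_months(cool_seeding - hot_seeding, hot_storage - cool_storage)
print(f"Cool recovers its extra seeding cost after about {months} months")
```

Under these assumptions the lower-cost tier takes a little over two years to pay back its higher seeding charges, which is why the object count matters so much when choosing a tier.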

With our solution, Veritas Alta SaaS Protection, we use a number of strategies to mitigate activity costs for our clients, including inline deduplication and compression. In some scenarios, policy-based containerization of smaller objects that are suitable for archiving works to significantly reduce the object count.

In general, Veritas Alta SaaS Protection optimizes your cloud storage footprint by applying analytics and policy-driven tiering that leaves small objects on performance tiers while moving less active large objects to infrequent or archive tiers automatically. With this approach, we can minimize storage operations while maximizing storage cost efficiency.

Not all egress is equal: Data transfer is negligible

There are two types of egress:

- Retrieving data from storage

- Downloading data out of the cloud region

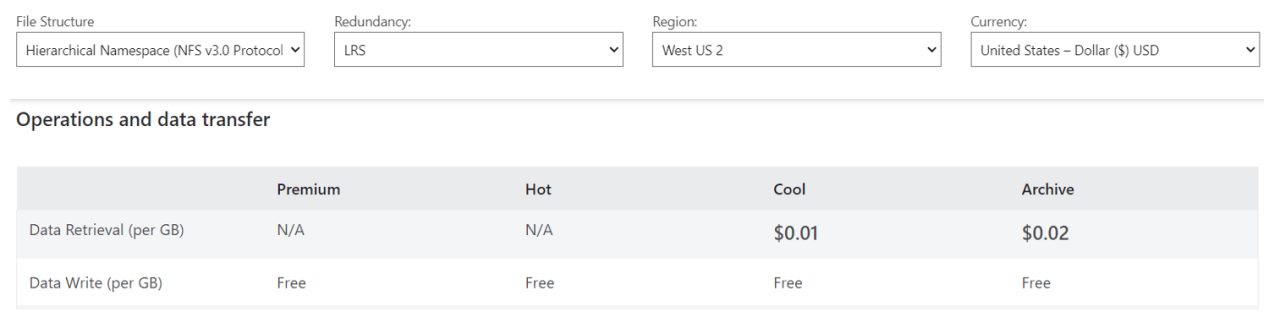

Retrieving data is a storage-level data transfer cost factor, whereas downloading data out of the cloud region is a network bandwidth data transfer cost. We’ll get to network egress costs a bit later, but the good news is that storage-level data transfer costs are negligible.

We rarely see retrieval data transfer costs exceed $20 in a month. For example, accessing any amount of data from Hot has no data transfer cost. Accessing 5 TB would cost around $50 if retrieved from Cool, or $100 if retrieved from Archive.

(Note: Veritas Alta SaaS Protection does not use the archive tier – we wanted the higher storage performance for our solution.)

Continuing with the West US 2 Azure price example, we can see there is no ingress data transfer cost, and we have minor per gigabyte fees for data retrieval on specific storage tiers:

(Note: While Azure costs vary per region, Veritas Alta SaaS Protection costs are the same in all regions.)

Putting egress bandwidth in perspective

Too often we hear from industry analysts and pundits that cloud storage is cheap but that egress costs are the hidden danger. Storage hardware vendors often call out the egress costs as a reason to avoid cloud storage.

Indeed, egress charges aren’t something you think about when your data resides on your infrastructure.

However, we should put egress bandwidth costs in perspective by quantifying them before assuming they’re a dealbreaker.

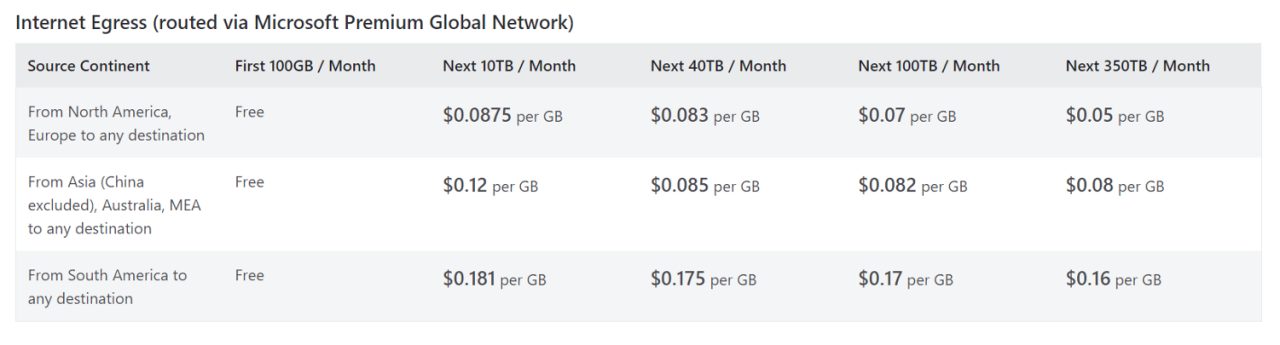

First, here’s a look at how pricing works for egress data transfer:

(Prices shown are based upon routing network traffic via the Microsoft Premium Global Network. Not using this option has a lower cost, but a similar pricing structure.)

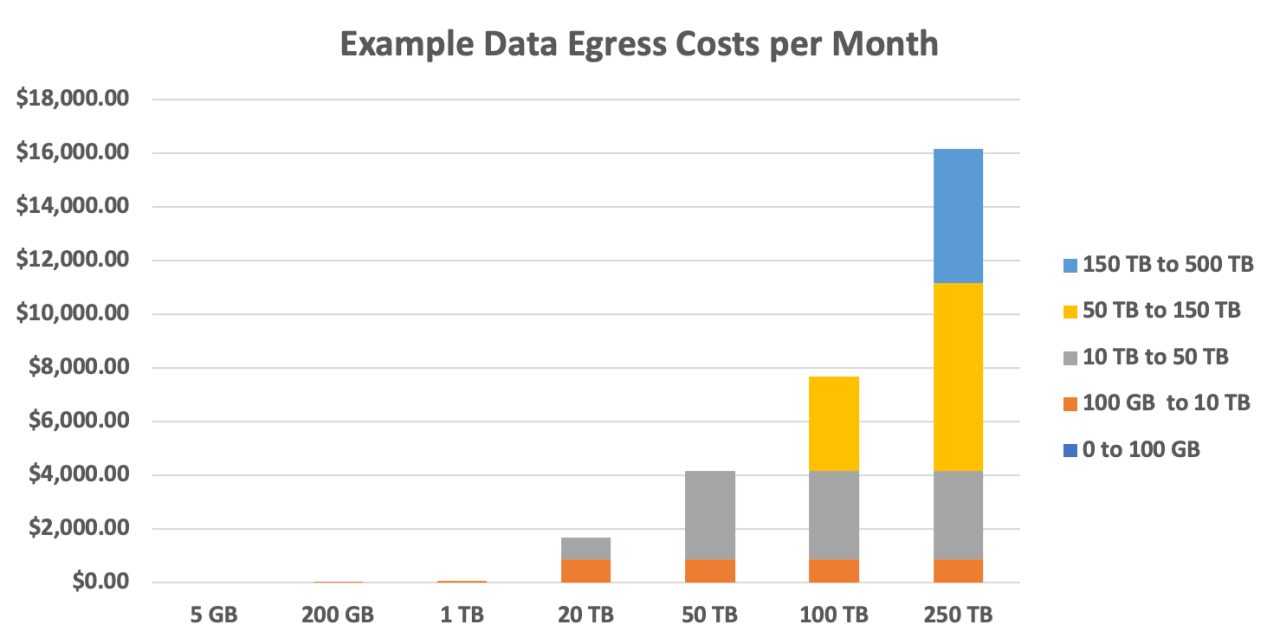

Like with storage, Azure's data egress fees will vary depending on the region. The costs are volume discounted and based on the data size (in gigabytes):

We can see that egress on smaller amounts of data is an insignificant cost factor. However, downloading more than 10 terabytes inside of a month becomes notable.

The thing to keep in mind is that most data is low touch, if not completely inactive.

(Note: If you choose the Veritas-hosted deployment model for Veritas Alta SaaS Protection, you won't see add-on charges for egress.)

Because Veritas Alta SaaS Protection provides built-in file analytics for the storage platform, customers have good visibility into their retrieval activity. In almost all cases, a small percentage of any given data set is accessed each month. One customer, for example, uses Veritas Alta SaaS Protection backup and seamless tiering behind a high-transaction on-premises application which egresses around one terabyte monthly, representing 1.4% of their storage footprint with Veritas. But that is on the higher end. More commonly, we see customers with scenarios like this: more than 500 terabytes of data in storage and only 300-500 GB on average egressing each month (that is roughly 0.06% to 0.1% of the overall data set).

In reality, egress is typically small, even when we are cloud tiering multiple large-scale file servers on-premises (because recent data is cached locally, and users rarely access things older than 30 days).

However, there are circumstances where egress costs are front and center. One instance is when you want to create a segregated cloud backup copy of your storage account in a separate cloud region. If you have 100 TB in storage and want to enable a geo-backup, this may mean a one-time egress of 50-60 TB (post deduplication and compression).

While it is good to understand egress costs at scale, in practice we only see it as a factor in geo-redundant storage replication or backup, or in rare configurations that require replication of data sets to an external service. (For example, using the Extra Data Backup add-on option of Veritas Alta SaaS Protection.) Otherwise, because most organizations only interact with a small percentage of the data in storage, egress is typically an insignificant cost.

Cloud storage pricing nuances

Here are some other cloud storage details to keep in mind when planning your budget:

Archive storage tiers have usage fees that can nullify their cost advantages

The archive storage tiers have incredibly low pricing—in some cases, less than one tenth of a penny per gigabyte monthly (i.e., $0.00099/GB/mo.). However, these tiers are for data you need to keep for long periods and are highly unlikely to retrieve.

While you can recover your data, doing so from an archive tier involves moving the data up to a “cool” or “infrequent access” tier. The thing to watch for is that rehydrating within a specified period after the initial write is considered an early access/deletion from the archive and this comes with a cost penalty.

For example, AWS stipulates that data placed in their archive tier has a minimum storage duration of 90 days, and access before this time incurs a pro-rated charge equal to the storage charge for the remaining days. Similarly, Microsoft Azure’s archive tier has the same concept, but with a minimum of 180 days of storage before the early access fee no longer applies.

So, make sure to store only low-touch or cold long-term retention workloads in these archive tiers, and you’ll do well to mitigate early access/deletion fees.
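The pro-rated penalty described above can be estimated as the storage charge for the days still owed on the minimum period. The following is a rough sketch, assuming a 30-day billing month and a hypothetical function name. Providers may calculate the charge differently, so treat the result as an estimate only.

```python
from decimal import Decimal


def early_deletion_fee(gb, price_per_gb_month, minimum_days, days_stored, days_per_month=30):
    """Pro-rated storage charge for the days remaining in the minimum retention period."""
    remaining_days = max(minimum_days - days_stored, 0)
    return Decimal(gb) * Decimal(price_per_gb_month) * remaining_days / days_per_month


# Hypothetical case: 10 TB (10,000 GB) rehydrated after 60 days at $0.00099/GB/month
# with a 180-day minimum, so 120 days of storage are still owed.
fee = early_deletion_fee(10_000, "0.00099", minimum_days=180, days_stored=60)
print(f"early deletion fee: ${fee:,.2f}")
```

Once `days_stored` reaches the minimum, the function returns zero, which matches the point at which the early access fee stops applying.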

(Note: These high access fees are not an issue with Veritas Alta SaaS Protection as it doesn’t use the Archive tier.)

No ingress data transfer fees

Cloud providers want to make it attractive to move your data into their storage services, so you won’t find ingress data transfer costs at either storage or network levels.

Don’t forget about redundancy levels

It’s easy to focus on the lower pricing you get with locally redundant storage (all prices referenced in this post are for local redundancy only). However, if you need geographic replication or backup of your data, then you should plan accordingly because there is a bump in cost. While you’re planning for geo-redundancy, remember that you need to include the geo-replication bandwidth cost, which varies by region (usually around $0.02 per gigabyte).
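As a quick budgeting aid, the replication bandwidth line item is just the transferred volume multiplied by the per-gigabyte rate. The sketch below applies the approximate $0.02 per gigabyte figure to the 50-60 TB geo-backup example mentioned earlier. The rate, the decimal unit conversion, and the function name are assumptions to replace with your region's actual values.

```python
from decimal import Decimal

GEO_RATE_PER_GB = Decimal("0.02")  # approximate figure from this article; verify per region
GB_PER_TB = 1_000                  # assumption: decimal units (1 TB = 1,000 GB)


def replication_bandwidth_cost(tb_transferred, rate_per_gb=GEO_RATE_PER_GB):
    """One-time bandwidth charge for replicating `tb_transferred` to another region."""
    return Decimal(tb_transferred) * GB_PER_TB * rate_per_gb


low = replication_bandwidth_cost(50)
high = replication_bandwidth_cost(60)
print(f"one-time replication bandwidth: ${low:,.2f} to ${high:,.2f}")
```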

Prices vary by cloud region/geography

The major cloud providers have data centers in numerous countries, which means they’re running costs in multiple currencies. You’ll find that a specific storage service from the same cloud provider will have price variance between regions, in some cases with a significant difference. Moreover, you should not be surprised to find that not all cloud regions from the same provider are equal (e.g., a storage service may not be available in certain areas).

Our recommendation is never to assume the pricing and services available from one region to be consistent with another. Always take the time to verify regional prices before you submit a budget plan.

(Note: With Veritas-hosted deployments of Veritas Alta SaaS Protection, costs are identical across all Azure regions.)

Cost modeling your workloads in Veritas Alta SaaS Protection

If you’re looking to use Veritas Alta SaaS Protection’s customer-hosted option, we can help you figure out what you need and how much it’s going to cost. Contact us if you want a cost estimate for your scenario. Our pricing calculator can help you model cloud storage spend, accurately depicting the cost of your subscription over multiple years.